This series of posts discusses some basic ideas in data mining . For all posts in the series see here.

I got lot of useful feedback on my post on K-means. Most common were to discuss the basic intuition in more detail and to include more figures to clarify the ideas . Thanks people for those comments !

Topic and Organization

In this post , I will discuss about Principal Component Analysis aka PCA , a very popular dimensionality reduction technique. It has an amazing variety of applications in statistics, data mining, machine learning, image processing etc

PCA is a huge topic to cover in a single post. So I plan to write it as a trilogy – First post will talk about PCA in lower dimensions with emphasis on geometric intuition and application to compression. Second post will extend it to arbitrary dimensions and discuss about basic dimensionality reduction , and the two common algorithms for calculating PCA. Third post will discuss Eigenfaces , which is probably one of the coolest application of PCA where we use it for face recognition.

Motivation for PCA

Before discussing PCA per se, let us discuss the reasons why PCA is one of the most important technique in data mining / machine learning. In this post I will primarily discuss with respect to compression. In the next post, we will discuss the more general idea of dimensionality reduction.

Let us assume that we have some arbitrary process that generates (x,y) points in 2 dimensional space . We do not know the behavior/internals of the process but we suspect that there is some inherent relation between x and y. There can be many reasons for trying to know the internal behavior. For eg if we know the relation between x and y, then we need to store only x and the transformation function. For eg if we had 1000 points in 2 dimensions , then we need 2000 words to store them (assuming each number takes word to store ,which is 4 bytes) . But if we knew the relation between x and y, we only need to store 1000 numbers (ie only x) which will occupy 1000 words as we can recalculate y from x using the transformation function. We get a 50% compression !

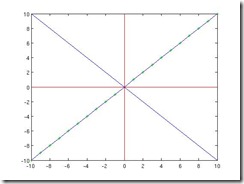

Let us take an example. Assume that our unknown process, generated some points which when plotted , we get the following graph.

The red lines are the x-y axes and the green crosses are the points generated by the process. The relation between x and y is obvious to us now. The internal logic is . Now if we just store x, then we know how to get y. Some other examples of possible functions are

(Celsius to Fahrenheits) or

( miles to kilometers) . These two examples are slightly not very obvious but still we can see that x and y are related by some transformation function. PCA works best when this transformation function is linear.

are all linear but

is not.

Let us discuss the example in slightly more detail. In this simple example, we were able to easily identify the transformation function as the data was in 2 dimensions. In 2 dimensions, the only valid linear transformation is that of a line. But in higher dimensions it will be a (hyper)plane and hence finding the exact linear transformation might be hard to do it just by eyeballing the data. In fact, even visualizing the data in higher dimensions is a big problem. Hence we need a more algorithmic way to find the relation.

Short Detour To Linear Algebra : Vector Spaces and Basis

Basis is one of the fundamental ideas in Linear Algebra. I will give a short discussion of basis here – for more details consult some good book on Linear Algebra or some video lectures on it. For more details on learning it see my post on Linear Algebra .

Before discussing basis, let us talk about Vector Spaces. Intuitively, a vector space is a collection of vectors . It has the additional property that if you take any two vectors in it and add them, the result is also in the original collection. Similarly, if you multiply any vector by a scalar , the result is also in the original collection. So we can say that vector space is a collection of vectors that are "closed" under vector addition and scalar multiplication. A subspace is a similar idea. A subspace is subset of vectors in the original vector space but also have the behavior of a vector space. ie being closed under vector operations.

If the above paragraph sounded very abstract , it isn’t. You can consider the set of all real values vectors representing points in 2 dimensions as vector space. It is represented as . If you take any two vectors and add them the result is still in

. Same goes for scaling.

Now that we have discussed vector spaces, let us talk about Basis. Basis is again a set of vectors. We say that B is a Basis for a Vector Space V , if all the vectors in vector space V can be expressed as a linear combination of the vectors in the basis,B . So the vectors in the basis can express every vector in the vector space as a linear combination of themselves but no vector in a basis can be expressed as linear combination of other vectors in a basis. Wikipedia concisely states this fact – Basis is a "linearly independent spanning set."

The idea of Basis might sound confusing, but an example might clear it. Let us consider all the real valued 2-dimensional points represented by . The simplest basis (aka standard basis ) for

that corresponds to the x-y axes are

We can notice several things in this basis.

a) All the vectors in can be represented as a linear combination of

and

.

b) The vectors and

are the columns of the identity matrix. Also the two vectors are orthogonal. (ie the vectors are perpendicular to each other)

c) More interestingly, the two vectors and

are also orthonormal (orthogonal vectors with unit length)

d) We can also notice that this basis corresponds to the standard x-y axes.

An important thing to note is that a vector space might have more than one basis. There are common techniques like Gram-Schmidt to generate Orthogonal sets of vectors. For eg , other valid basis for are :

Change Of Basis

So why did we discuss basis so much ? The reason is that PCA can be considered as a process of finding a new basis which is a linear combination of the original basis such that the inherent structure of points becomes more clear. Most of the time the standard basis (which is the x-y axes) makes a lousy basis. Again, let us see a picture to clarify this idea.

This image is very similar to the previous image. As before, the red lines are the x-y axes and the green crosses are the points. But what are the blue lines ? They are two axes which are perpendicular to each other but have the nice property that they bring out the internal structure more clearly. In other words, they are the new basis. Let us refer them as and

.

How is this new basis related to the original basis ? We get the new basis by rotating the original basis by 45 degrees. Remember, rotation, stretching and shrinking of the constituent vectors are all valid linear transformations.

Why is this new basis better than the old one ? Because , in this new basis the original point become (1,0), (2,0) and so on. So we only need one number to represent a point instead of the two in the original basis. We can also think that we have projected points in 2-dimensions to 1-dimension and have got the benefits of compression ! But we know that we can still get all the points back if needed.

A Dose of Real World

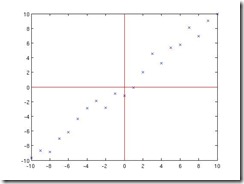

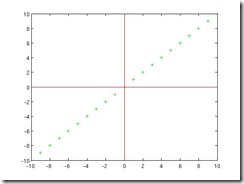

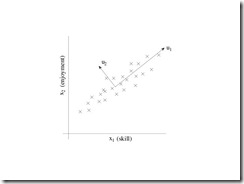

So far we have been living in the ideal world where the sensors gave us perfect data. But in real world , the sensors usually give the data with some noise. So let us take a look at the plot where a gaussian noise is added to the points.

So whatever we discussed above still holds but with slight modifications. Since the data was in 2 dimensions, to get a compression we need to project the points to 1 dimension. So we have to choose to project the points onto one of the axes (basis). How do we select the which axis to project on ? Since the points have noise in them , we need to project the points on to the axis which minimizes the projection error. Let us consider both the cases :

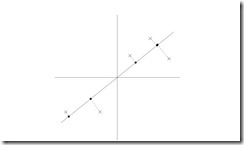

[Image Credit : The following 3 images are obtained from Stanford Prof Andrew Ng‘s Machine Learning course : Lecture notes on PCA ]

Case 1: Here we project on the first vector(axis) . The image with the projection error is here.

Case 2: Here we project on the first vector(axis) . The image with the projection error is here.

Which projection did you prefer ? I am sure you would have preferred the first projection intuitively. Algorithmically too that is the correct answer. The projection onto vector had lesser projection error than the projection onto vector

. In short, PCA selects a basis which is a linear combination of the original basis which also minimizes the projection error. How it does that , we will discuss in the next post.

Alternate View Of PCA

So far we have discussed PCA in terms of change of basis. There is an alternate way of looking at it . This way is slightly less intuitive but is very useful when you want to prove the optimality of PCA or derive formulas for PCA. The new idea is to look at the variance.

Let us take a look at the points again.

We have to select one of the axis onto which we want to project the points. Which axis should you select ? From the image , vector is in the direction of higher variance than vector

. Hence we need to select projection over

because that projection tries to maintain more information about the original points than the projection onto

. Please look at the images of the actual projection onto

and

given above. Projection on

is bad as some points in the original data might be projected onto a same point in the new axis.

An alternate reasoning is that the original points will be along the direction of highest variance and the noise will be along the direction of the lesser variance. This is because, noise by definition is usually much smaller compared to original data points. Since lies along the direction of highest variance it must lie on the best fit line .

being smaller gives the direction of the noise. ( What happens if the noise is too high ? PCA will still work but with very high projection error )

There is a formal proof that shows that minimizing the projection error and maximizing the variance are equivalent. We will skip the proof here.

Summary

The important points in this post are the following :

1. The standard basis is usually a bad one to store data.

2. PCA tries to find a new basis such that the projection error is minimized.

3. Alternate way to look at PCA is that it finds a vector to project which is in the direction of the highest variance.